Hadoop Package

This page describes how to use the hadoop package in VisTrails. The package works on Mac and Linux.

Installation

Install vistrails

Get vistrails using git and check out a version supporting the hadoop package:

git clone http://vistrails.org/git/vistrails.git
cd vistrails
git checkout 976255974f2b206f030b2436a5f10286844645b0

If you are using a binary distribution of vistrails, replace the vistrails folder inside it with this checkout.

Install BatchQ-PBS and the RemotePBS package

This python package is used for communication over ssh. Get it with:

git clone https://github.com/rexissimus/BatchQ-PBS

Copy BatchQ-PBS/batchq to the site-packages folder of the python installation that vistrails uses.

Copy BatchQ-PBS/batchq/contrib/vistrails/RemotePBS to ~/.vistrails/userpackages/
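If it is unclear where the site-packages folder is, the following minimal sketch prints it. It assumes you run it with the same python interpreter that vistrails uses (a binary distribution may bundle its own); the function names are illustrative and not part of vistrails or BatchQ-PBS.

```python
import os
import sysconfig


def site_packages_dir():
    """Return the folder where this interpreter installs pure-Python packages."""
    return sysconfig.get_paths()["purelib"]


def batchq_target():
    """Return the path the batchq folder would have after copying it."""
    return os.path.join(site_packages_dir(), "batchq")


if __name__ == "__main__":
    # Copy BatchQ-PBS/batchq to the location printed here.
    print(batchq_target())
```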

Install the hadoop package

git clone git://vgc.poly.edu:src/vistrails-hadoop.git ~/.vistrails/userpackages/hadoop

Modules used with the hadoop package

Dialogs/PasswordDialog

Used to specify the password for the remote machine.

Remote PBS/Machine

Represents a remote machine running SSH.

- server - the server url

- username - the remote server username, default is your local username

- password - your password, connect the PasswordDialog to here

- port - the remote ssh port, set to 0 to use the default port

Connecting to the Poly cluster through vgchead

The hadoop job submitter runs on gray02.poly.edu. If you are outside the Poly network, you need to use an SSH tunnel to get through the firewall.

Add this to ~/.ssh/config:

Host vgctunnel
    HostName vgchead.poly.edu
    LocalForward 8101 gray02.poly.edu:22

Host gray02
    HostName localhost
    Port 8101
    ForwardX11 yes

Set up a tunnel to gray02 by running:

ssh vgctunnel

In vistrails, create a Machine module with server set to gray02 (the Host alias defined above) and port set to 0. Now you have a connection that can be used by the hadoop package.
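Before running a workflow it can help to confirm that the tunnel is actually up. The sketch below only checks that something accepts TCP connections on the forwarded local port 8101; it does not verify that the other end is gray02. The function name and the timeout are illustrative assumptions.

```python
import socket


def tunnel_is_open(host="localhost", port=8101, timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except socket.error:
        # Connection refused, timed out, or host not reachable.
        return False
    sock.close()
    return True


if __name__ == "__main__":
    if tunnel_is_open():
        print("Port 8101 is open; the tunnel appears to be running.")
    else:
        print("Nothing is listening on port 8101; run 'ssh vgctunnel' first.")
```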

HadoopStreaming

Runs a hadoop job on a remote cluster.

- CacheArchive - Jar files to upload

- CacheFiles - Other files to upload

- Combiner - The combiner file to use after the mapper. Can be the same as the reducer.

- Environment - Environment variables

- Identifier - A unique string identifying each new job. The job files on the server will be called ~/.vistrails-hadoop/.batchq.%Identifier%.*

- Input - The input file/directory to process

- Mapper - The mapper program (required)

- Output - The output directory name

- Reducer - The reducer program (optional)

- Workdir - The server workdir (Default is ~/.vistrails-hadoop)
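Since Identifier has to be unique for each new job, one option is to generate it. The sketch below is an illustration only: the helper names and the identifier layout (prefix, timestamp, random suffix) are assumptions, and it is not confirmed here which characters the package accepts in an identifier. The second helper builds the job file pattern described above so the files can be located on the server.

```python
import time
import uuid


def new_identifier(prefix="job"):
    """Build an identifier that is very unlikely to repeat between runs."""
    stamp = time.strftime("%Y%m%d-%H%M%S")
    return "%s-%s-%s" % (prefix, stamp, uuid.uuid4().hex[:8])


def job_file_pattern(identifier, workdir="~/.vistrails-hadoop"):
    """Return a glob pattern matching the server-side files of one job."""
    return "%s/.batchq.%s.*" % (workdir, identifier)


if __name__ == "__main__":
    ident = new_identifier("wordcount")
    print(ident)
    print(job_file_pattern(ident))
```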

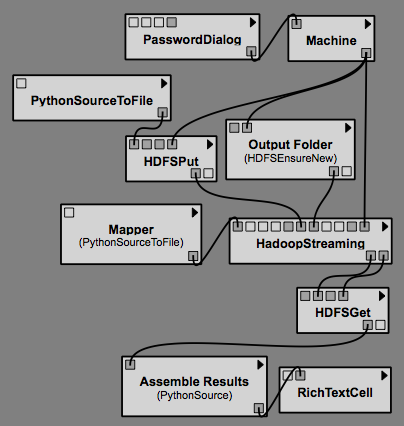

Example workflow:

HDFSEnsureNew

Deletes a file/directory from remote HDFS storage.

HDFSGet

Retrieves a file/directory from remote HDFS storage. Used to get the results.

- Local File - Destination file/directory

- Remote Location - Source file/directory in HDFS storage

HDFSPut

Uploads a file/directory to remote HDFS storage. Used to upload mappers, reducers and data files.

- Local File - Source file/directory

- Remote Location - Destination file/directory in HDFS storage

PythonSourceToFile

A PythonSource module whose code is written to a file. Used to create mapper/reducer files.
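As an illustration of what such mapper/reducer files can contain, here is a minimal word-count sketch in the usual Hadoop Streaming style: the mapper reads lines and emits tab-separated key/value pairs, and the reducer receives those pairs sorted by key. Word count is an example chosen here, not something the package prescribes, and the "map"/"reduce" command-line argument is a hypothetical convention; in a workflow each function would more likely live in its own file with its own loop over standard input.

```python
import sys
from itertools import groupby


def map_lines(lines):
    """Mapper: emit 'word<TAB>1' for every whitespace-separated word."""
    for line in lines:
        for word in line.split():
            yield "%s\t1" % word


def reduce_lines(lines):
    """Reducer: sum the counts per word; input must be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines)
    for word, group in groupby(pairs, key=lambda pair: pair[0]):
        total = sum(int(count) for _, count in group)
        yield "%s\t%d" % (word, total)


# Hypothetical convention: run as "script.py map" or "script.py reduce".
if __name__ == "__main__" and sys.argv[1:] in (["map"], ["reduce"]):
    step = map_lines if sys.argv[1] == "map" else reduce_lines
    for out in step(sys.stdin):
        print(out)
```

Because this reducer reads and writes the same key/count format and summing can be applied to partial results, it could also serve as the combiner.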

URICreator

Creates links to locations in HDFS storage for input data and other files.