Difference between revisions of "Assignment 3 - MapReduce algorithm design"

| Line 47: | Line 47: | ||

b. The result files: rfstripes.txt, rfpairs.txt, rfcomp.txt | b. The result files: rfstripes.txt, rfpairs.txt, rfcomp.txt | ||

''Note that you have to output '''only the top 100 word pairs''' sorted by decreasing order of relative frequency.'' | ''Note that you have to output '''only the top 100 word pairs''' sorted by decreasing order of relative frequency.'' | ||

Revision as of 18:07, 6 April 2014

Assignment 3: Computing Relative Frequencies

Dataset description

For this assignment you will explore a set of 100,000 Wikipedia documents:

- s3://cs9223/wikitext_100k.txt, or

- https://s3.amazonaws.com/cs9223/wikitext_100k.txt

Each line in this file consists of the plain text extracted from a Wikipedia document.

Task

Compute the relative frequencies of each word that occurs in the documents in wikitext_100k.txt and output the top 100 word pairs sorted by decreasing order of relative frequency.

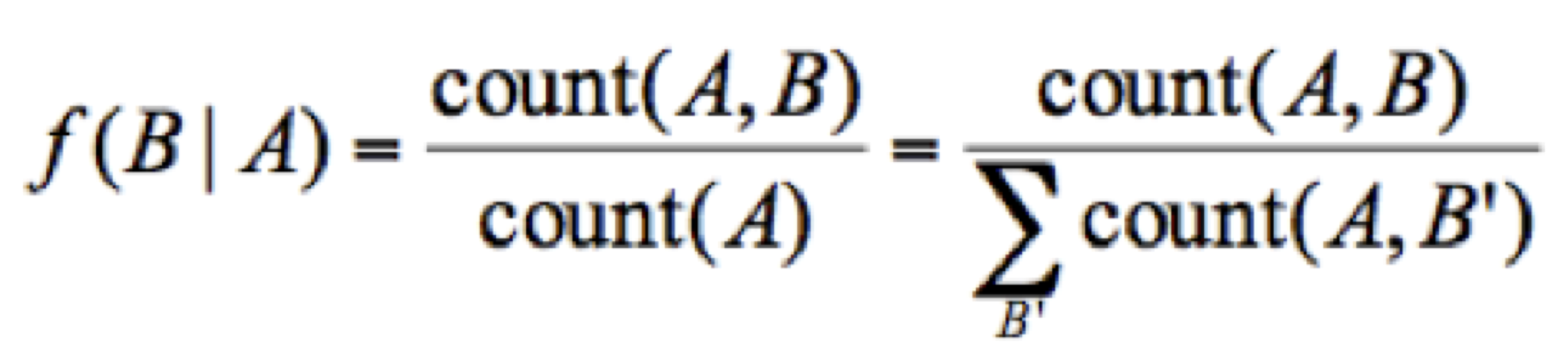

Recall that the relative frequency (RF) of word B given word A is defined as follows:

where count(A,B) is the number of times A and B co-occur in a document and count(A) the number of times A occurs with anything else. Intuitively, given a document collection, the relative frequency captures the proportion of time the word B appears in the same document as A. (See Section 3.3, in Data-Intensive Text Processing with MapReduce).

In the lecture on March 10th, you learned different approaches to do this, and in this assignment, you will implement them:

a. Write a mapreduce program which uses the Stripes approach and writes its output in a file named rfstripes.txt

b. Write a mapreduce program which uses the Pairs approach and writes its output in a file named rfpairs.txt

c. Compare the performance of the two approaches and output the relative performance to a file named rfcomp.txt. Compute the relative performance as follows: (running time for Pairs/ running time for Stripes). Also include an analysis comparing the communication costs for the two approaches.

Requirements

You can write your program using Java or Python (with Hadoop streaming) and you should run it on AWS. It is a good idea to develop and test your program on a local machine (or on the NYU Hadoop cluster) before deploying on AWS. In addition to the source code, you will submit a text file that contains the commands you used to run each Mapreduce job (i.e., pairs and stripes) -- include the Hadoop streaming commands you used to run your jobs.

1. readme.txt must include all the commands you used to run your code and produce the required results.

2. All files needed to execute these commands must be stored in the same directory.

3. Your code should both read from and write to Amazon S3 directly. In other words, input_directory and output_directory should be in S3.

4. You must use Hadoop version 1.0.3.

When and What to submit

Your assignment is due on April 6th, 2014 at 11:59pm. All the required files should be compressed in one ZIP file and submitted to NYU Classes. The ZIP file should contain:

a. Source code and readme.txt (For Java, in addition to the source, you must include the JAR file)

b. The result files: rfstripes.txt, rfpairs.txt, rfcomp.txt

Note that you have to output only the top 100 word pairs sorted by decreasing order of relative frequency.

c. A plain-text document named rf.txt that describes

- the input/output format in each Hadoop task, i.e., the keys for the mappers and reducers

- the Hadoop cluster settings you used, i.e., number of mappers and reducers

- the running time for each approach: pairs and stripes

Note: all files must be in the same directory